Here’s a story that evangelists for so-called AI (artificial intelligence) – or machine learning (ML) – might prefer you didn’t dwell upon. It comes from the pages of Nature Machine Intelligence, as sober a journal as you could wish to find in a scholarly library. It stars four research scientists – Fabio Urbina, Filippa Lentzos, Cédric Invernizzi and Sean Ekins – two of whom, Urbina and Ekins, work for a pharmaceutical company that builds machine-learning systems for finding “new therapeutic inhibitors” – substances that interfere with a chemical reaction, growth or other biological activity involved in human diseases.

The essence of pharmaceutical research is drug discovery. It boils down to a search for molecules that may have therapeutic uses and, because there are billions of candidates, it makes looking for a needle in a haystack seem like child’s play. So the arrival of ML, which enables machines to sift through billions of possibilities, was a dream come true, and the technology is now embedded throughout the industry.

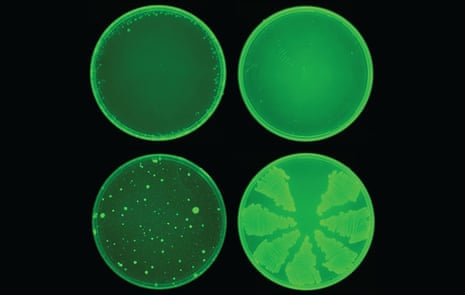

Here’s how it works, as described by the team who discovered halicin, a molecule that proved effective against the drug-resistant bacteria that are causing increasing difficulty in hospitals. “We trained a deep-learning model on a collection of [around] 2,500 molecules for those that inhibited the growth of E coli in vitro. This model learned the relationship between chemical structure and antibacterial activity in a manner that allowed us to show the model sets of chemicals it had never seen before and it could then make predictions about whether these new molecules… possessed antibacterial activity against E coli or not.”

Once the model was trained, they set it to explore a different library of 6,000 molecules, and it came up with one that had originally been investigated only as a possible treatment for diabetes. When that molecule was tested against dozens of the most problematic bacterial strains, it was found to work – and to have low predicted toxicity in humans. In a nice touch, they christened it halicin after HAL, the AI in Kubrick’s 2001: A Space Odyssey.

This is the kind of work Urbina and his colleagues were doing in their lab: searching for molecules that met two criteria, promising therapeutic activity and low toxicity in humans. Their generative model penalised predicted toxicity and rewarded predicted therapeutic activity. Then they were invited by the Swiss Federal Institute for Nuclear, Biological and Chemical Protection to a conference on technological developments that might have implications for the Chemical and Biological Weapons Conventions. The organisers wanted a paper on how ML could be misused.

“It’s something we never really thought about before,” recalled Urbina. “But it was just very easy to realise that, as we’re building these machine-learning models to get better and better at predicting toxicity in order to avoid toxicity, all we have to do is sort of flip the switch around and say, ‘You know, instead of going away from toxicity, what if we do go toward toxicity?’”

So they flipped the switch, and in the process opened up a nightmarish prospect for humankind. In less than six hours, the model generated 40,000 molecules that scored within the threshold set by the researchers. It designed VX and many other known chemical warfare agents, which the researchers confirmed separately against structures in public chemistry databases. It also designed many new molecules that looked equally plausible, some of them predicted to be more toxic than publicly known chemical warfare agents. “This was unexpected,” the researchers wrote, “because the datasets we used for training the AI did not include these nerve agents… By inverting the use of our machine-learning models, we had transformed our innocuous generative model from a helpful tool of medicine to a generator of likely deadly molecules.”

Ponder this for a moment: some of the “discovered” molecules were potentially more toxic than the nerve agent VX, which is one of the most lethal compounds known. VX was developed in the early 1950s at Porton Down, the British government research establishment that is now part of the Defence Science and Technology Laboratory (DSTL). It’s the kind of weapon that previously could be developed only by state-funded labs such as Porton Down. But now a malignant geek with a rackful of graphics processing units and access to a molecular database might come up with something similar. And although some specialised knowledge of chemistry and toxicology would still be needed to convert a molecular structure into a viable weapon, we have now learned – as the researchers themselves acknowledge – that ML models “dramatically lower technical thresholds”.

Two things strike me about this story. The first is that the researchers had “never really thought about” the possible malign uses of their technology. In that, they were probably typical of the legions of engineers who work on ML in industrial labs. The second is that, while ML clearly provides a powerful augmentation of human capabilities (power steering for the mind, as it were), whether this is good news for humanity depends on whose minds it is augmenting.

What I’ve been reading

Fake climate solutions

Aljazeera.com has published We Are “Greening” Ourselves to Extinction, a sharp essay by Vijay Kolinjivadi of the University of Antwerp.

Time to grow up

Molly White is coruscating in Sam Bankman-Fried Is Not a Child, an essay in her Substack newsletter.

Funny money

Mihir A Desai has written an excellent New York Times piece, The Crypto Collapse and the End of Magical Thinking.